Over the last couple of decades, some terms have fallen out of use (or come to mean something new) while others have become more mainstream. This means that some terms now mean different things to different people, depending on their organisation’s level of data maturity. Some data-related terms are used interchangeably, making it difficult to know what someone’s really talking about.

Whether you’ve been in the industry for a while or you’re just starting out, it’s worth exploring the current differences between analytics, business intelligence, data science and machine learning. We’ve set some standard definitions to encourage more data professionals and businesses to get on the same page with terminology.

Defining Four Data-Focused Processes

Analytics, business intelligence, data science and machine learning are all data-focused processes that attempt to draw value from data. Beyond that, they are distinct practices, so let’s define those differences.

Analytics

The textbook definition of analytics broadly covers the practice of discovering data, identifying patterns and interpreting them to uncover meaning. In practice, analytics has come to mean the reporting or displaying of statistical data in tables, graphs, charts or dashboards.

A traditional example of this might be using a tool like Microsoft SQL Server Reporting Services to generate a sales report (with numbers and charts) that’s scheduled to go out via email each morning. From these analytics, you might be able to draw some conclusions that guide certain decisions in your business.

Some businesses have very mature analytics processes that apply some rigour to validating the reported outputs, but this rigour is not applied uniformly across industry (or often even within a single business). Much of analytics in business is merely presenting raw data collected from applications back to the business in a visually appealing manner. This practice alone doesn't really allow for the cleaning of data, or consider the bias or correctness of the data reported.

There is value in analytics, as it raises interesting questions in the pursuit of meaning, but businesses should be cautious about how much confidence they place in decisions based on analytics alone. In many cases, businesses will use their analytics as a starting point for understanding their data.

Business Intelligence

Business intelligence involves pulling data together from different sources and organising it to give you a more complete understanding. In business intelligence, data is modelled and, to an extent, ‘cleaned’ to attempt to fix issues with the raw source data (such as missing or incorrect values in fields). In modelling the data, you can shape it around answering business questions, such as those about customer demographics. For example: ‘How do sales of product X differ between the age brackets 20-35, 36-59 and 60+?’

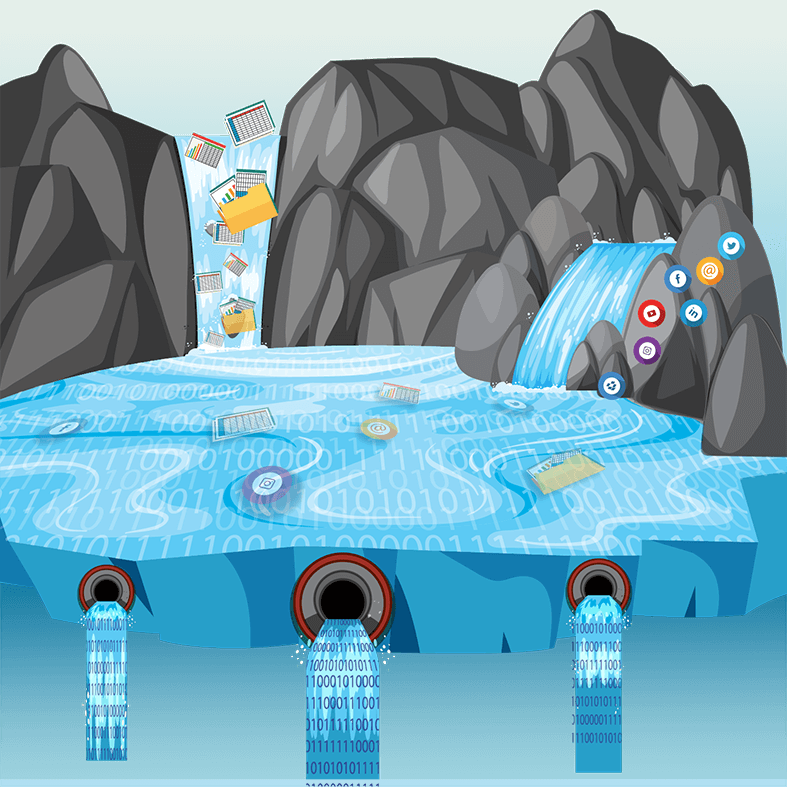
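As a rough illustration of the age-bracket question above, the sketch below cleans and groups a handful of sales rows in plain Python. The rows, field names and the `unknown` bucket are all hypothetical; a real business intelligence pipeline would do this modelling in an ETL tool or data warehouse rather than in a script.

```python
from collections import defaultdict

# Hypothetical raw rows, as they might arrive from a source system.
raw_sales = [
    {"customer_age": 24, "product": "X", "amount": 120.0},
    {"customer_age": 41, "product": "X", "amount": 80.0},
    {"customer_age": None, "product": "X", "amount": 55.0},  # missing age
    {"customer_age": 67, "product": "X", "amount": 200.0},
    {"customer_age": 33, "product": "Y", "amount": 40.0},    # other product
    {"customer_age": 58, "product": "X", "amount": 30.0},
]


def age_bracket(age):
    """Map an age to one of the brackets used in the business question."""
    if age is None or age < 20:
        # 'Cleaning' step: keep the sale, but flag that the age is unusable.
        return "unknown"
    if age <= 35:
        return "20-35"
    if age <= 59:
        return "36-59"
    return "60+"


def sales_by_bracket(rows, product):
    """Total the sales of one product per age bracket."""
    totals = defaultdict(float)
    for row in rows:
        if row["product"] != product:
            continue
        totals[age_bracket(row["customer_age"])] += row["amount"]
    return dict(totals)


result = sales_by_bracket(raw_sales, "X")
print(result)
```

Keeping the row with the missing age in its own bucket, rather than silently dropping it, is one possible design choice: it makes the gap in the source data visible in the report.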

Tooling in business intelligence includes analytics tooling such as graphs, visualisations and online dashboards, but also tools that help process and clean data (ETL), store data (data lakes) and model data (data warehouses), and that give staff better access to data for ‘self-service’ reporting (where staff are able to interrogate data themselves rather than relying only on pre-fabricated reports).

Through standardising data, business intelligence allows you to report over often disparate source systems in a common and consistent format. This is certainly an improvement over simply re-presenting raw data from individual systems, as simple analytics does.

Data Science

Data science is a relatively new (and currently on-trend) term. The key to understanding what makes this approach to data distinct from business intelligence is, well… science.

Compared to business intelligence, where data can be manipulated to create meaning, data science requires a rigid, scientific approach. This involves:

- Making hypotheses about what you expect to find before you do it

- Determining whether the results ended up being what you expected (and why or why not)

- Using common, mathematical ways to look at the data

- Never assuming your data is correct

- Cleaning the data to remove errors, instead of just throwing it into a tool and crossing your fingers

- Using a statistical approach (e.g. standard deviation) to remove outliers (which will skew the results)

- Exploring ways of removing bias from the data

- Experimenting with different approaches

- Proving validity and correlation.

Data science seeks assurance that the insights, trends and correlations being used to make important business decisions are true, unbiased and accurate.
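The outlier step in the list above can be sketched with Python's standard library. This is a minimal example, not a recommended method: the order counts are made up, and the cut-off of two standard deviations (`max_z`) is an assumption that would need justifying for real data.

```python
from statistics import mean, stdev

# Hypothetical daily order counts; 950 looks like a data-entry error.
daily_orders = [102, 98, 105, 97, 101, 99, 103, 100, 96, 104, 950]


def remove_outliers(values, max_z=2.0):
    """Drop values more than max_z sample standard deviations from the mean."""
    centre = mean(values)
    spread = stdev(values)
    if spread == 0:
        # All values are identical, so there is nothing to remove.
        return list(values)
    return [v for v in values if abs(v - centre) / spread <= max_z]


cleaned = remove_outliers(daily_orders)
print("mean before:", round(mean(daily_orders), 1))
print("mean after: ", round(mean(cleaned), 1))
```

Note that the mean and standard deviation are themselves pulled around by extreme values, so on small samples this approach can miss outliers. That is one reason the list also calls for experimenting with different approaches.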

In the programming world, one popular data science tool is Jupyter Notebook. This software allows engineers to run data science experiments and write notes in the same place. Once they’re satisfied with the methodology and the assumptions have been validated, the applied learning can be published back for general consumption in the business through existing business intelligence processes and analytics. While the outcomes may look similar to business intelligence and analytics, the primary improvement that data science provides is confidence in the reliability of the results, which comes from methodical practices.

Machine Learning

Machine learning is, in many ways, a more mature version of data science. It takes data science practices and combines them with algorithms and the extreme compute power of modern computing to make computer-generated predictions. This is achieved by training algorithms with prepared data to build models (a set of processes and calculations that make a prediction from a given input). These models are then evaluated to determine their accuracy. A sufficiently accurate model can then be hosted to serve predictions (inference) and used in applications and big data projects.
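The train, evaluate and host cycle described above can be shown in miniature. The sketch below fits a straight line by least squares, which is about the simplest model there is; the data, the held-out split and the `ACCEPTABLE_ERROR` threshold are all hypothetical, and real projects would use an ML library and far more data.

```python
# Hypothetical prepared data: x might be advertising spend, y the resulting sales.
train_x = [1, 2, 3, 4, 5, 6]
train_y = [3.1, 4.9, 7.2, 8.8, 11.1, 12.9]

# Held-out data the model never sees during training, used only for evaluation.
test_x = [7, 8]
test_y = [15.0, 17.1]

ACCEPTABLE_ERROR = 0.5  # assumed threshold for "sufficiently accurate"


def train(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Make a prediction from a given input using the trained model."""
    slope, intercept = model
    return slope * x + intercept


def mean_absolute_error(model, xs, ys):
    """Average size of the prediction error on a dataset."""
    return sum(abs(predict(model, x) - y) for x, y in zip(xs, ys)) / len(xs)


model = train(train_x, train_y)
error = mean_absolute_error(model, test_x, test_y)
ready_to_host = error < ACCEPTABLE_ERROR
print("model:", model, "error:", round(error, 3), "ready to host:", ready_to_host)
```

Evaluating on data held back from training is the important part: a model scored only on the data it was trained on can look more accurate than it really is.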

An example of ML in practice can be seen in social media feeds. ML algorithms are run on a pool of user behaviour data to make predictions that are used to tailor the experience to each individual user, with content and suggestions that match their interests.

Machine learning uses many of the same tools as data science, but engineers may also work with machine learning environments like Databricks and Amazon SageMaker that provide unique tooling to ‘Label’, ‘Feature Engineer’, ‘Train’, ‘Validate’, ‘Tune’, and ‘Host’ these solutions.

What’s Next in Your Data Journey?

When it comes to analytics vs business intelligence vs data science vs machine learning… where do you actually start? And which approach is right for your business?

It’s clear that these four data-focused processes represent an evolution of data for businesses. You could look at them as a four-step process:

Step 1: Analytics

Access your data and analytics across the metrics your business needs to monitor.

Step 2: Business intelligence

Unify your data sources and analytics in one place, with business intelligence shaped around the questions that drive your business’s success.

Step 3: Data science

Get more scientific with how you gather and analyse data so you can rely on the insights for critical decision making.

Step 4: Machine learning

Apply machine learning to build in predictions that support future-focused decision making and automation.

The best starting place (or the logical next step) will depend on how mature your company’s data practices are. A good rule of thumb is to figure out where you are now, master that, and then move on to the next step.

Skills and Training

As each process represents an evolution, it brings about an expanded ecosystem to support your data activities. Much like the changes in terminology, we also see significant changes in the skills and job titles of staff who handle aspects of this ecosystem. In smaller companies, many of these tasks may be handled by multi-skilled individuals. Common roles seen today are:

- Database Administrator (DBA) - An expert in managing and operating traditional relational databases or warehouses

- DevOps Engineer - A cloud expert who provisions resources with code

- MLOps Engineer - An ML cloud-services expert who provisions ML processes and workflows with code

- DataOps/Data Engineer - A data-centric expert who manages data governance and big data storage, and provisions access to clean data with code

- Data Scientist - A data analyst who applies the scientific method to derive insight from data

- BI Developer - An expert in the modelling and presentation of data

- Business Analyst - An expert in identifying and communicating the needs of the business to determine areas of value.

Across these roles, there are dominant skills expected of staff today. While SQL remains an important language for querying databases, Python has become the primary language of choice for data projects due to the maturity of the available libraries and packages that can easily manipulate data. Automation through code is also a key aspect of a modern data platform, ensuring that data solutions can be developed rapidly and iteratively and delivered to the business.

When building your data team, consider skills that will deliver the breadth you need and programming languages and frameworks that cross roles to build strong collaboration and support.

Tooling

The evolution of tooling across these four processes can be confusing and expensive for businesses to navigate. As discussed, each process uses different tools but is also reliant on having a cohesive ecosystem that works together.

Many businesses unfortunately run their data platform on a set of very disparate (often legacy) software suites that can be difficult to break away from. The investment in time and skills needed just to support these suites often prevents businesses from adopting a modern approach and capitalising on the value of their data. The unfortunate short-sightedness of many a ‘data journey’ is to focus only on ‘what works for now’, forgetting to consider how you will get to your final destination. Your ultimate success in getting data to work for you requires you to future-proof your tooling and have an ecosystem that grows with you.

Today’s best data platforms are without question public cloud-based platforms. They deliver big data capability fast, efficiently and cost-effectively. They also allow you to pick and choose the right service on your data journey without paying for features you don't yet need. If you are starting your journey today, the other key principle in tooling is ‘serverless’. Do not pay for fixed infrastructure; only pay for what you use.

Take AWS, the world's largest cloud provider - within the same ecosystem you can support all data processes and activities of your organisation.

- Data Lake (S3)

- Data Governance (Lake Formation)

- ETL/Data Wrangling (AWS Glue, SageMaker Data Wrangler)

- Data Catalog (AWS Glue)

- Big Data Query (Athena)

- Machine Learning (SageMaker)

- Serverless Data Warehouse (Redshift Serverless)

- Dashboards, Charts, KPIs (QuickSight)

- AI Services (Rekognition, Textract, Translate, Transcribe, Comprehend, Polly, Lex, Personalize, Forecast)

- Streaming Data and Monitoring (Amazon Kinesis, OpenSearch)

- Data Ingestion (AWS IoT, Amazon Kinesis, DataSync, DMS)

- Application Development, Integration & Workflow (Lambda, API Gateway, Step Functions)

- …trust me, I could go on.

Not only are all your bases covered from a data platform perspective, but all services are underpinned by the same first-class security tooling such as centralised policies (IAM), compliance (SCPs, AWS Config) and cybersecurity (GuardDuty) as all your other IT workloads.

It’s hard to argue for using bespoke third-party solutions for each component of your data platform over a unified, serverless, DevSecOps-managed cloud ecosystem. The sheer effort needed to manage, integrate and secure those separate solutions, and to retain staff skilled in each of them, far outweighs any advantage found in a feature-by-feature comparison.

When choosing your tooling, consider how flexible, secure, capable, cost-effective, manageable, accessible, automated and serverless your platform is.

Looking for support with mastering the next step? Our Adelaide-based team of data experts are here to help.

Contact us and let’s chat about what you’d like to do with your data.